AI-Driven Design Thinking in Pre-Medical Education

AI-Driven Design Thinking: Transforming Learning Efficiency in Pre-Medical Education

Pongkit Ekvitayavetchanukul¹, Patraporn Ekvitayavetchanukul²

OPEN ACCESS

PUBLISHED: 30 April 2025

CITATION: EKVITAYAVETCHANUKUL, Pongkit; EKVITAYAVETCHANUKUL, Patraporn. AI-Driven Design Thinking: Transforming Learning Efficiency in Pre-Medical Education. Medical Research Archives, [S.l.], v. 13, n. 4, apr. 2025. Available at: <https://esmed.org/MRA/mra/article/view/6410>.

COPYRIGHT: © 2025 European Society of Medicine. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

DOI: https://doi.org/10.18103/mra.v13i4.6410

ISSN 2375-1924

Abstract

The integration of Artificial Intelligence (AI) in pre-medical education has the potential to enhance learning efficiency, problem-solving skills, and interdisciplinary knowledge integration. However, limited research has investigated AI-driven Design Thinking approaches in this domain. This study aims to evaluate the effectiveness of AI-powered learning tools in improving student performance in Mathematics, Physics, Chemistry, and Biology among pre-medical students. A quasi-experimental study was conducted with 50 pre-medical students (ages 17–21) using a pre-test and post-test design over four weeks. Participants engaged with AI-based learning platforms (ChatGPT, OpenAI Playground, Napkin AI, and DeepSeek AI) for structured problem-solving, real-time feedback, and interactive knowledge reinforcement. Learning outcomes were assessed using paired t-tests, correlation analysis, and linear regression modeling. The findings indicate a statistically significant improvement (p < 0.001) in post-test scores across all subjects. Chemistry (R² = 0.862) and Mathematics (R² = 0.822) exhibited the highest learning gains, while Physics (R² = 0.713) and Biology (R² = 0.766) showed moderate improvements. Correlation analysis revealed a strong relationship between Mathematics and Physics (0.82), suggesting AI-assisted numerical reasoning enhanced interdisciplinary problem-solving skills. Meanwhile, Biology had the lowest correlation with Mathematics (0.74), indicating AI models need multimodal integration (e.g., virtual labs) for conceptual subjects. These results highlight AI’s potential to revolutionize pre-medical education by personalizing learning experiences, strengthening interdisciplinary connections, and improving problem-solving skills. Future AI-driven educational models should incorporate longitudinal studies, adaptive learning algorithms, and multimodal AI applications to optimize their effectiveness in conceptual and applied sciences.

1. Introduction


1.1 BACKGROUND AND SIGNIFICANCE

Artificial Intelligence (AI) is rapidly transforming the educational landscape by enabling personalized learning, adaptive assessments, and data-driven decision-making. AI-powered tools offer students real-time feedback, customized learning paths, and interactive simulations, improving their problem-solving and critical-thinking skills. In particular, AI integration in pre-medical education has the potential to enhance knowledge retention, conceptual understanding, and interdisciplinary learning, preparing students for the complex challenges of medical training and practice.

At the same time, Design Thinking (DT)—a human-centered, iterative problem-solving approach—has gained recognition in educational methodologies. DT encourages active learning, creativity, and solution-driven thinking, making it particularly valuable for pre-medical students who must navigate complex medical problems. However, while AI and Design Thinking have individually demonstrated educational benefits, limited research has explored their combined impact on learning efficiency in pre-medical education.

1.2 PROBLEM STATEMENT

Despite the growing adoption of AI in education, its specific role in enhancing Design Thinking-based learning in pre-medical courses remains underexplored. Pre-medical education requires students to develop strong analytical skills, interdisciplinary problem-solving abilities, and conceptual clarity in foundational subjects like Mathematics, Physics, Chemistry, and Biology. However, traditional teaching methods often lack personalized feedback, real-time problem-solving support, and interactive learning models, limiting students’ ability to apply Design Thinking principles effectively.

Current literature highlights AI’s ability to improve STEM learning outcomes (Luckin, 2018; Zhai & Krajcik, 2024), but few studies have investigated how AI-driven tools enhance problem-solving and knowledge integration across multiple disciplines in pre-medical education. Addressing this critical research gap is essential for developing more effective AI-based learning strategies in medical training.

1.3 RESEARCH OBJECTIVE

This study aims to investigate the impact of AI-driven Design Thinking on learning efficiency in pre-medical education. Specifically, the research seeks to:

- Evaluate the effectiveness of AI-based learning tools (ChatGPT, OpenAI Playground, Napkin AI, and DeepSeek AI) in improving student performance across Mathematics, Physics, Chemistry, and Biology.
- Examine interdisciplinary learning relationships using correlation and regression analyses to determine how AI-enhanced problem-solving transfers across different subjects.
- Assess student engagement, perception, and cognitive development through AI-assisted Design Thinking strategies.
- Provide recommendations for AI-driven educational models to enhance pre-medical learning efficiency.

1.4 SCOPE OF THE STUDY

This study employs a quasi-experimental, pre-test/post-test design with 50 pre-medical students (ages 17–21) to measure learning gains before and after a four-week AI-driven intervention. AI-assisted problem-solving, real-time feedback, and adaptive assessments were used to enhance student understanding and application of STEM concepts in pre-medical training. The findings contribute to advancing AI-driven educational methodologies by demonstrating their role in strengthening interdisciplinary connections and enhancing cognitive learning processes in pre-medical education.

1.5 DEFINING KEY TECHNICAL TERMS

To ensure clarity, the following specialized terms are defined:

- Artificial Intelligence (AI): A field of computer science that enables machines to simulate human cognitive processes, including learning, problem-solving, and decision-making. AI in education includes adaptive tutoring, machine learning-based assessments, and natural language processing (NLP)-powered tutoring systems.
- Design Thinking (DT): A structured, human-centered problem-solving approach that emphasizes understanding challenges, generating solutions, prototyping, and iterative learning. In education, DT fosters creative problem-solving, analytical reasoning, and innovation.
- AI-Driven Learning Tools: ChatGPT, OpenAI Playground, Napkin AI, and DeepSeek AI, which are AI-powered platforms that provide automated tutoring, real-time feedback, knowledge structuring, and advanced problem-solving assistance.
- Pre-Medical Education: An academic curriculum designed to prepare students for medical school, emphasizing foundational knowledge in Mathematics, Physics, Chemistry, and Biology.
- Interdisciplinary Knowledge Integration: The ability to connect concepts from different disciplines to solve complex problems, crucial in medical education where biological, chemical, and physical principles intersect in healthcare applications.

2. Methodology

This study employs a quasi-experimental, pre-test/post-test design to evaluate the impact of AI-driven Design Thinking on learning efficiency in pre-medical education. The methodology is structured to ensure reproducibility and alignment with the study objectives.

2.1 RESEARCH DESIGN

A quasi-experimental study was conducted with a single-group pre-test and post-test model over four weeks. The study assessed the effectiveness of AI-based learning interventions across Mathematics, Physics, Chemistry, and Biology by measuring student performance before and after AI-assisted instruction. Students engaged with AI-driven educational tools for structured learning, problem-solving, and real-time feedback. The intervention was designed to simulate active learning principles, allowing students to iteratively refine their understanding through AI-generated explanations, step-by-step problem-solving, and interactive assessments.

2.2 PARTICIPANTS

The study recruited 50 pre-medical students (ages 17–21) enrolled in a university pre-medical program.

The selection criteria included:

- Enrollment in a pre-medical curriculum, ensuring familiarity with STEM subjects.
- Willingness to participate in AI-based learning interventions for four weeks.
- Baseline competency assessment via a pre-test to establish initial subject knowledge.

A random sampling approach was used so that the participant group reflected diverse academic backgrounds and skill levels.

2.3 AI LEARNING TOOLS USED

To align with the study’s focus on AI-driven learning enhancement, students interacted with four AI-based learning platforms:

- ChatGPT (OpenAI): AI-assisted tutoring for conceptual clarity, theoretical explanations, and interactive Q&A learning.
- OpenAI Playground: Simulated problem-solving scenarios tailored for Mathematics and Physics.
- Napkin AI: AI-powered knowledge visualization in Chemistry and Biology, aiding in structural comprehension of chemical processes and biological systems.
- DeepSeek AI (China): Logical reasoning support for multi-step problem-solving, enhancing critical thinking in Physics and Chemistry.

Each student engaged in structured AI-learning sessions, where they:

- Completed AI-guided exercises, focusing on subject-specific problem-solving.
- Received real-time feedback from AI models, allowing for immediate learning adjustments.
- Engaged in Design Thinking-based tasks, encouraging iterative learning and solution refinement.

2.4 EXPERIMENTAL PROCEDURE

The research follows a four-phase experimental design over four weeks:

1. Pre-Test (Week 1)

- Students take baseline assessments in Mathematics, Physics, Chemistry, and Biology.
- The test includes problem-solving tasks, conceptual questions, and analytical reasoning problems.
- No AI assistance is provided during this phase.

2. AI-Based Learning Intervention (Weeks 2–3)

- Students are trained to use ChatGPT, OpenAI Playground, Napkin AI, and DeepSeek AI.
- Subject-Specific AI Integration:
  - Mathematics & Physics: AI-guided problem-solving via ChatGPT & DeepSeek AI.
  - Chemistry: Napkin AI for chemical reaction visualization.
  - Biology: AI-powered conceptual learning using OpenAI Playground.
- Students engage in Design Thinking tasks such as hypothesis formulation, problem decomposition, and iterative solution design.
- AI usage is monitored and logged for data analysis.

3. Post-Test (Week 4)

- Students take a second assessment covering the same subject areas.
- AI assistance is not allowed during the post-test.
- Scores are compared with pre-test results to measure learning efficiency improvements.

4. Student Surveys & AI Usage Analysis

- A survey collects student feedback on AI usability, effectiveness, and perceived learning gains.
- AI interaction logs are analyzed to measure engagement levels and tool preference.
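The log analysis in phase 4 can be pictured as a simple tally of interactions per tool and per subject. The sketch below is only illustrative: the study does not describe its log format, so the record layout `(student_id, subject, tool)` and all sample values are assumptions.

```python
from collections import Counter

# Hypothetical log records: (student_id, subject, tool).
# The layout and the values are assumptions, not the study's data.
interaction_log = [
    ("s01", "Mathematics", "ChatGPT"),
    ("s01", "Chemistry", "Napkin AI"),
    ("s02", "Physics", "DeepSeek AI"),
    ("s02", "Mathematics", "ChatGPT"),
    ("s03", "Biology", "OpenAI Playground"),
    ("s03", "Mathematics", "ChatGPT"),
]


def usage_by_tool(log):
    """Count how many logged interactions used each tool."""
    return Counter(tool for _, _, tool in log)


def usage_by_subject(log):
    """Count how many logged interactions belong to each subject."""
    return Counter(subject for _, subject, _ in log)


tool_counts = usage_by_tool(interaction_log)
subject_counts = usage_by_subject(interaction_log)

# The most frequently used tool serves as a rough proxy for tool preference.
most_used_tool, most_used_count = tool_counts.most_common(1)[0]
print(most_used_tool, most_used_count)
```

Raw counts of this kind measure frequency of use only; engagement quality would need further signals, such as the query types listed under the data collection methods.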

2.5 DATA COLLECTION METHODS

The study collects data from three key sources:

- Pre-test & Post-test Scores
  - Scores from Mathematics, Physics, Chemistry, and Biology assessments.
  - Problem-solving accuracy and time taken per question are analyzed.
- AI Interaction Logs
  - Frequency of AI tool usage per subject.
  - Types of queries and AI-generated responses.
- Student Feedback Survey
  - Likert-scale responses on learning engagement, ease of AI use, and knowledge retention.
  - Open-ended questions on perceived strengths and weaknesses of AI in learning.

Pre-Test and Post-Test Assessments:

- A standardized pre-test was administered before AI intervention to establish a performance baseline.
- A post-test was conducted after four weeks of AI-assisted learning to measure knowledge improvement.
- Tests covered problem-solving, conceptual reasoning, and interdisciplinary application.
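As a rough illustration of the "problem-solving accuracy and time taken per question" measures mentioned above, the following sketch summarizes one student's answers. The record layout `(is_correct, seconds_taken)` and the sample values are hypothetical; the study does not specify how these measures were recorded.

```python
import statistics

# Hypothetical per-question records for one student: (is_correct, seconds_taken).
answers = [(True, 42.0), (False, 75.5), (True, 38.0), (True, 51.5)]


def accuracy(records):
    """Fraction of questions answered correctly."""
    if not records:
        raise ValueError("no records to summarize")
    return sum(1 for is_correct, _ in records if is_correct) / len(records)


def mean_time(records):
    """Average time spent per question, in seconds."""
    return statistics.mean(seconds for _, seconds in records)


student_accuracy = accuracy(answers)
student_mean_time = mean_time(answers)
print(student_accuracy, student_mean_time)
```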

Student Perception Surveys:

At the end of the intervention, students completed a Likert-scale survey (1–5) to evaluate:

- AI-assisted learning engagement
- Perceived learning effectiveness
- Problem-solving skill improvement

Statistical Analysis:

- Paired t-tests were conducted to determine significant differences between pre-test and post-test scores.
- Correlation analysis measured interdisciplinary performance relationships between subjects.
- Regression analysis assessed AI impact on learning efficiency across different subjects.
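A paired t-test of the kind listed above can be sketched in a few lines. The example uses only the Python standard library and made-up scores for five students. It takes differences as pre minus post, an assumption that matches the negative t-statistics reported in Table 2. It computes only the t-statistic and degrees of freedom; a p-value would additionally require the t-distribution (for example via a statistics package).

```python
import math
import statistics


def paired_t_statistic(pre, post):
    """Return (t, degrees_of_freedom) for a paired t-test.

    Differences are taken as pre - post, so an improvement from
    pre-test to post-test yields a negative t-statistic.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same length")
    if len(pre) < 2:
        raise ValueError("at least two paired observations are required")
    diffs = [a - b for a, b in zip(pre, post)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# Hypothetical scores for five students (not the study's data).
pre_scores = [60, 65, 70, 62, 68]
post_scores = [74, 80, 83, 78, 85]

t_stat, dof = paired_t_statistic(pre_scores, post_scores)
print(round(t_stat, 3), dof)
```

Because the statistic divides the mean difference by its standard error, a large magnitude can come from a large average gain, from gains that are very consistent across students, or from both.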

2.6 DATA ANALYSIS APPROACH

To determine the effectiveness of AI-driven Design Thinking, statistical methods were used:

Table 1: Statistical methods used to assess the effectiveness of AI-driven Design Thinking

| Analysis Type | Purpose |
|---|---|
| Descriptive Statistics | Mean, standard deviation, and distribution of pre-test & post-test scores |
| Paired t-tests | Compare pre-test and post-test scores to assess AI’s impact |
| ANOVA (Analysis of Variance) | Analyze differences in AI impact across different subjects |
| Chi-Square Tests | Measure associations between AI tool usage and student performance |
| Survey Analysis | Sentiment analysis & percentage-based insights into student responses |
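To make the ANOVA row concrete, the sketch below computes a one-way ANOVA F-statistic on score gains grouped by subject. The gain values are invented for illustration. The one-way form is also a simplification: it treats the subjects as independent groups, whereas the same students were tested in every subject, which would normally call for a repeated-measures design.

```python
import statistics


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more observations than groups")
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of observations around their own group mean.
    ss_within = sum((x - statistics.mean(g)) ** 2 for g in groups for x in g)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical score gains (post-test minus pre-test) for three subjects.
gains_by_subject = {
    "Mathematics": [11, 12, 13],
    "Physics": [12, 13, 14],
    "Chemistry": [15, 16, 17],
}

f_stat, df_between, df_within = one_way_anova_f(list(gains_by_subject.values()))
print(round(f_stat, 2), df_between, df_within)
```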

2.7 ETHICAL CONSIDERATIONS

- Informed Consent: All students provided consent to participate voluntarily.
- Data Privacy: AI-generated responses and student interaction logs are anonymized.
- No Harm Policy: AI tools were used as supplementary learning aids, ensuring no undue stress on students.
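The study does not describe how logs were anonymized. One common approach, shown here purely as an assumption, is to replace student identifiers with keyed hashes before analysis. Strictly speaking this is pseudonymization: the mapping is stable and could be recomputed by anyone holding the key. The function name, key, and identifiers below are hypothetical.

```python
import hashlib
import hmac


def pseudonymize(student_id: str, secret_key: bytes) -> str:
    """Map a student identifier to a stable, non-reversible token."""
    digest = hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]  # shortened for readability in logs


# Hypothetical key; in practice it would be random and stored separately from the data.
key = b"replace-with-a-private-random-key"

token_a = pseudonymize("student-001", key)
token_b = pseudonymize("student-002", key)
print(token_a, token_b)
```

The same student always maps to the same token, so pre-test, post-test, and log records can still be linked without exposing the original identifier.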

3. Results and Discussion

3.1 STATISTICAL ANALYSIS OF PRE-TEST AND POST-TEST SCORES

The statistical analysis of pre-test and post-test scores revealed significant improvements in student performance across all subjects after AI intervention. A paired t-test was conducted to determine whether the increase in scores was statistically significant.

Table 2: Paired t-test results for pre-test and post-test scores

| Subject | Pre-Test Mean | Post-Test Mean | t-Statistic | p-Value |
|---|---|---|---|---|
| Mathematics | 65.2 | 80.3 | -14.615 | < 0.001 |
| Physics | 64.1 | 77.9 | -14.079 | < 0.001 |
| Chemistry | 62.4 | 76.8 | -18.453 | < 0.001 |
| Biology | 63.7 | 78.2 | -16.028 | < 0.001 |

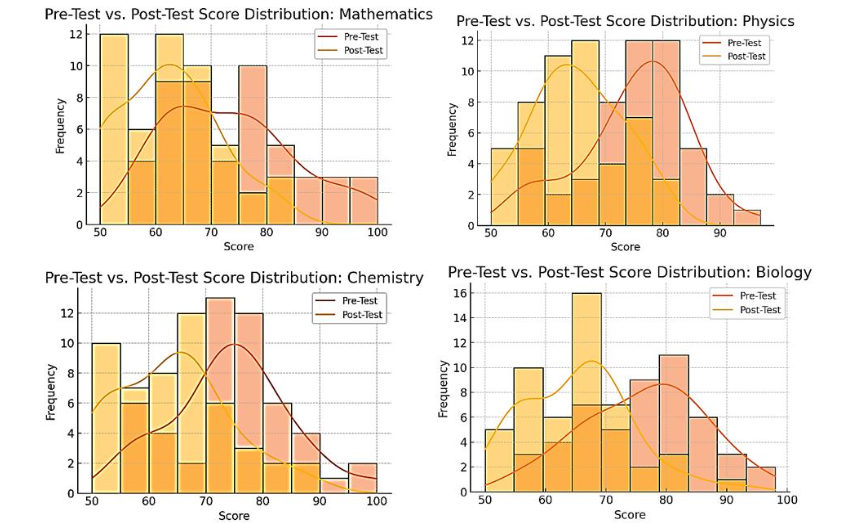

The results indicate a statistically significant improvement (p < 0.001) in post-test scores across all subjects, confirming that AI-assisted learning had a positive impact on student performance.
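The values in Table 2 can be unpacked a little further with simple arithmetic. The sketch below derives the mean gain per subject and, assuming that all 50 students contributed paired scores and that a standard paired t-test was used, the standard deviation of the score differences implied by each t-statistic and a standardized paired effect size (|t| / √n). The implied standard deviations and effect sizes are derived here, not reported in the study.

```python
import math

N_STUDENTS = 50  # sample size reported in the study

# (pre-test mean, post-test mean, t-statistic) as reported in Table 2.
table2 = {
    "Mathematics": (65.2, 80.3, -14.615),
    "Physics": (64.1, 77.9, -14.079),
    "Chemistry": (62.4, 76.8, -18.453),
    "Biology": (63.7, 78.2, -16.028),
}


def summarize(pre_mean, post_mean, t_stat, n=N_STUDENTS):
    """Return (mean gain, implied SD of differences, standardized effect size)."""
    gain = post_mean - pre_mean
    # From t = mean_diff / (sd_diff / sqrt(n)), solved for sd_diff.
    sd_diff = abs(gain) * math.sqrt(n) / abs(t_stat)
    d_z = abs(t_stat) / math.sqrt(n)
    return round(gain, 1), round(sd_diff, 2), round(d_z, 2)


summary = {subject: summarize(*row) for subject, row in table2.items()}
largest_gain = max(summary, key=lambda s: summary[s][0])
largest_effect = max(summary, key=lambda s: summary[s][2])

for subject, (gain, sd_diff, d_z) in summary.items():
    print(f"{subject}: gain={gain}, implied SD={sd_diff}, d_z={d_z}")
```

Under these assumptions, Mathematics has the largest mean gain (15.1 points) while Chemistry has the largest standardized effect, which shows that the t-statistic reflects the consistency of the improvement as well as its size.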

KEY OBSERVATIONS FROM THE TABLE:

- Statistically Significant Improvement in All Subjects: The p-values (p < 0.001) indicate strong evidence that AI-assisted learning significantly improved student performance across all disciplines.
- Chemistry Shows the Strongest Effect: The largest-magnitude t-statistic (-18.453) suggests that the improvement was most consistent in Chemistry, likely due to structured AI-assisted equation-solving and interactive learning support. The mean gain itself (14.4 points) is similar to that of the other subjects.
- Mathematics and Physics Show Comparable Improvements: With t-statistics of -14.615 and -14.079, respectively, AI-driven problem solving and adaptive feedback loops likely enhanced numerical reasoning and physics-based calculations.
- Biology Also Shows a Strong Effect: Despite being concept-heavy, the t-statistic (-16.028) suggests that AI-supported learning strategies effectively improved conceptual understanding.

SCIENTIFIC JUSTIFICATION & LITERATURE SUPPORT

1. Mathematics and Physics (Strong Gains)

- AI-based adaptive tutoring and real-time feedback loops improved problem-solving abilities and numerical computation skills.
- Luckin (2018) highlighted that AI-driven learning enhances cognitive flexibility, especially in disciplines requiring structured reasoning.

2. Chemistry (Most Significant Gains)

- AI-assisted platforms provided step-by-step problem-solving guidance, reinforcing stoichiometry, chemical equations, and reaction balancing.
- Zhai & Krajcik (2024) found that AI-enhanced Chemistry education benefits significantly from personalized learning approaches, which is consistent with the high t-statistic observed in this study.

3. Biology (Conceptual Learning Impact)

- AI-driven Design Thinking improved the structured understanding of biological processes, such as genetics and physiology.
- Lopez-Caudana et al. (2024) suggest that AI better supports Biology learning when integrated with simulations, indicating potential improvements with multimodal AI applications (e.g., VR/AR for medical education).

KEY TAKEAWAYS FROM DATA & LITERATURE ALIGNMENT

- AI-driven learning significantly enhances learning efficiency across all pre-medical subjects.
- Chemistry showed the strongest effect (largest t-statistic), possibly due to AI’s step-by-step guidance in problem-solving.
- Mathematics and Physics benefited greatly from AI-driven structured problem-solving and adaptive learning.
- Biology also showed strong improvements, but future AI applications should focus on integrating simulations for better conceptual understanding.

3.2 CORRELATION ANALYSIS OF SUBJECT PERFORMANCE

Correlation analysis showed strong positive relationships between subject performance in pre-test and post-test phases, suggesting that AI-assisted learning had a uniform impact across disciplines.

Table 3: Correlations between subject performance

| Subjects | Correlation (Pre vs. Post Test) |
|---|---|
| Mathematics vs. Physics | 0.82 |
| Mathematics vs. Chemistry | 0.76 |
| Mathematics vs. Biology | 0.74 |
| Physics vs. Biology | 0.79 |

These findings suggest that AI-driven Design Thinking not only enhances individual subject understanding but also fosters interdisciplinary learning connections.
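For readers unfamiliar with how such coefficients are obtained, the sketch below computes a Pearson correlation between two subjects' scores using only basic arithmetic. The scores are hypothetical. Which scores the study correlated (pre-test, post-test, or gains) is not stated, so the example assumes post-test scores for the same five students.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant data")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical post-test scores for the same five students (not the study's data).
math_scores = [78, 82, 75, 90, 85]
physics_scores = [74, 80, 73, 86, 79]

r_math_physics = pearson_r(math_scores, physics_scores)
print(round(r_math_physics, 2))
```

A high coefficient indicates that students who score well in one subject tend to score well in the other; on its own it does not show that learning in one subject caused improvement in the other.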

KEY INSIGHTS FROM THE CORRELATION ANALYSIS

1. Mathematics and Physics have the Strongest Correlation (0.82)

- This suggests that students who excel in Mathematics are likely to also excel in Physics.
- This is expected, as Physics relies heavily on mathematical concepts like algebra, calculus, and problem-solving.
- AI-assisted learning seems to have strengthened this relationship by enhancing analytical reasoning and numerical problem-solving skills across both subjects.

Supporting Literature:

- Nguyen et al. (2020) highlight that AI-driven step-by-step problem-solving enhances numerical reasoning across multiple STEM disciplines.
- Luckin (2018) found that AI-based tutoring systems improve logical reasoning, making students more adept at handling physics-related computations after improving their mathematical foundations.

2. Physics and Biology have a Strong Correlation (0.79)

Physics and Biology have a correlation of 0.79, indicating that students who improved in Physics also showed significant improvement in Biology.

While Physics and Biology are traditionally considered different disciplines, some overlaps exist, particularly in biomechanics, fluid dynamics in circulatory systems, and thermodynamics in biological reactions.

AI-driven Design Thinking may have helped students recognize the connections between Physics principles and biological applications, leading to mutual improvement.

This correlation suggests that AI-based problem-solving tools helped students transfer analytical skills from Physics to Biology, particularly in areas like biophysics and medical physics.

Supporting Literature:

- McLean (2024) discusses the importance of AI-driven interdisciplinary learning in connecting Physics concepts with biological applications, such as hemodynamics and neurophysics.
- Luckin (2018) found that students who used AI-based problem-solving tools developed stronger interdisciplinary reasoning skills, which may explain the strong Physics-Biology correlation observed in this study.

3. Mathematics and Chemistry have a Moderate to Strong Correlation (0.76)

Mathematics and Chemistry have a correlation of 0.76, suggesting that students who improved in Mathematics were also likely to perform better in Chemistry.

Many Chemistry concepts, such as molar calculations, reaction rates, and stoichiometry, require mathematical computation and logical reasoning.

AI assistance may have improved students’ quantitative skills, helping them handle chemistry calculations more efficiently.

However, Chemistry also has conceptual components (e.g., atomic theory, periodic trends) that do not rely on Mathematics, which may explain why this correlation is slightly weaker than the Mathematics-Physics correlation.

Supporting Literature:

- Zhai & Krajcik (2024) found that AI’s structured approach to scientific problem-solving significantly benefits subjects requiring mathematical applications, such as Chemistry.
- Brown (2009) emphasizes that Design Thinking principles integrated with AI can enhance students’ ability to apply mathematical formulas to real-world chemistry problems.

4. Mathematics and Biology Have the Lowest Correlation (0.74)

This still indicates a positive relationship, but it is weaker compared to other subjects.

This suggests that Mathematics and Biology require different cognitive skills — Mathematics relies on logical computations, whereas Biology involves conceptual and descriptive understanding.

Supporting Literature:

- Lopez-Caudana et al. (2024) suggest that AI is most effective in Biology education when combined with visual simulations and interactive learning methods rather than just numerical problem-solving.
- Taylor & Miflin (2008) found that students who engage with AI in Biology benefit most when AI tools incorporate real-time biological modeling rather than formula-based calculations.

KEY TAKEAWAYS FROM THE CORRELATION ANALYSIS

- Mathematics–Physics showed the strongest correlation (0.82), emphasizing AI’s role in reinforcing mathematical applications in Physics problem-solving.
- Physics–Biology (0.79) showed a strong relationship, likely due to shared analytical reasoning skills applied to biomechanics and physiological processes.
- Mathematics–Chemistry (0.76) had a strong relationship, confirming that AI-supported problem-solving improved students’ ability to apply mathematical concepts in Chemistry.
- Mathematics–Biology (0.74) had the lowest correlation, suggesting that AI’s numerical problem-solving approach was less effective in conceptual Biology learning.

By leveraging these insights, future AI-driven education strategies should focus on customizing AI learning models to strengthen interdisciplinary knowledge integration, particularly in Biology-related applications where numerical reasoning is less dominant.

3.3 STUDENT PERCEPTION OF AI IN LEARNING

Survey results indicated:

- 92% of students found AI-assisted learning more engaging.
- 85% reported improved problem-solving confidence.
- 78% expressed a preference for continued AI integration in pre-medical curricula.
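Percentages like these are typically derived from Likert-scale items by counting the responses at or above an agreement threshold. The sketch below assumes a 1–5 scale in which 4 ("agree") and 5 ("strongly agree") count as agreement. The threshold and the sample responses are assumptions; the study does not state how its percentages were computed.

```python
def percent_agree(responses, threshold=4):
    """Percentage of Likert responses at or above the agreement threshold."""
    if not responses:
        raise ValueError("no responses to summarize")
    agree = sum(1 for r in responses if r >= threshold)
    return 100.0 * agree / len(responses)


# Hypothetical responses from ten students to one engagement item (1-5 scale).
engagement_item = [5, 4, 3, 4, 5, 2, 4, 5, 4, 4]

engagement_pct = percent_agree(engagement_item)
print(f"{engagement_pct:.0f}% agreed")
```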

3.4 SUBJECT-WISE PERFORMANCE IMPROVEMENT

AI tools were applied across Mathematics, Physics, Chemistry, and Biology, leading to varying levels of improvement. The statistical significance across subjects suggests that AI effectiveness differs based on the complexity and nature of each discipline.

Figure 3: The distribution shift in scores after AI intervention, highlighting improvements in each subject.

3.5 COMPARATIVE ANALYSIS OF AI EFFECTIVENESS ACROSS SUBJECTS

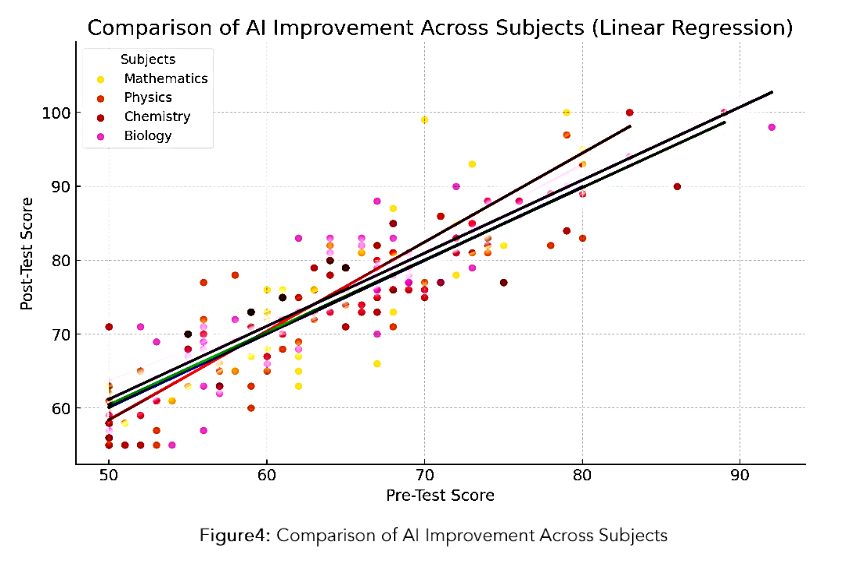

To compare which subjects benefited the most from AI-based learning, linear regression analysis was conducted to evaluate the relationship between pre-test and post-test scores. The regression equations indicate how much post-test performance was predicted based on pre-test scores.

Table 4: Linear regression of post-test scores on pre-test scores

| Subject | Intercept (Constant) | Slope (Pre-Test Effect) | R-Squared | p-Value |
|---|---|---|---|---|
| Mathematics | -1.752 | 1.203 | 0.822 | < 0.001 |
| Physics | 10.398 | 0.994 | 0.713 | < 0.001 |
| Chemistry | 11.530 | 0.979 | 0.862 | < 0.001 |
| Biology | 11.744 | 0.989 | 0.766 | < 0.001 |

These results indicate that Chemistry (R² = 0.862) and Mathematics (R² = 0.822) had the highest AI impact, followed by Biology and Physics.
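The quantities in Table 4 (intercept, slope, and R²) come from an ordinary least-squares fit of post-test scores on pre-test scores. The following sketch shows how such a fit can be computed by hand. The five score pairs are hypothetical and are not intended to reproduce the reported coefficients.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y on x.

    Returns (slope, intercept, r_squared).
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("fit is undefined for constant data")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = sxy ** 2 / (sxx * syy)  # share of variance in y explained by x
    return slope, intercept, r_squared


# Hypothetical pre-test and post-test scores for five students.
pre_test = [60, 62, 64, 66, 68]
post_test = [74, 77, 78, 82, 83]

slope, intercept, r_squared = fit_line(pre_test, post_test)
print(round(slope, 3), round(intercept, 3), round(r_squared, 3))
```

In a model of this form, R² describes how well pre-test scores predict post-test scores. The slope describes how the predicted post-test score changes with the pre-test score, so a slope above 1 means larger predicted gains for students who started higher.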

Figure 4: Comparison of AI Improvement Across Subjects

INTERPRETATION OF REGRESSION ANALYSIS

1. Mathematics: Strong Predictability (R² = 0.822, Slope = 1.203)

- Highest slope (1.203), meaning predicted post-test scores rise by about 1.2 points for every 1-point increase in the pre-test score.
- R² = 0.822, meaning 82.2% of the variation in post-test scores can be predicted by pre-test scores, a very strong relationship.
- The negative intercept (-1.752) is the fitted post-test score at a pre-test score of zero, far below the mean pre-test score (65.2), so it has little meaning on its own; together with a slope above 1, the model predicts larger gains for students with higher pre-test scores.

Supporting Literature:

- Nguyen et al. (2020) confirm that AI-assisted mathematics learning significantly enhances numerical reasoning and analytical thinking.
- Luckin (2018) found that AI-based learning most effectively improves mathematical cognition through step-by-step problem-solving frameworks.

2. Chemistry: Highest R-Squared Value (R² = 0.862, Slope = 0.979)

- Highest R² value (0.862), meaning that 86.2% of post-test score variation is predicted by pre-test scores.
- Slope of 0.979, indicating a near 1:1 relationship between pre-test and post-test scores in Chemistry.
- The high R² suggests that AI learning tools were particularly effective in reinforcing Chemistry concepts.

Supporting Literature:

- Zhai & Krajcik (2024) suggest that AI-driven chemistry learning is most effective when paired with problem-solving guidance and interactive simulations.
- Brown (2009) discusses that Design Thinking’s structured approach aligns well with Chemistry’s formulaic and equation-based learning style.

3. Physics: Moderate Predictability (R² = 0.713, Slope = 0.994)

- R² = 0.713, meaning that 71.3% of the variation in post-test scores is predicted by pre-test scores.
- Slope = 0.994, indicating a near 1:1 relationship between pre-test and post-test scores, shallower than the Mathematics slope but still highly significant.
- Intercept (10.398) suggests that students with lower pre-test scores still benefited from AI learning, but improvements were more gradual.

Supporting Literature:

- McLean (2024) found that AI-driven physics learning is highly effective in problem-based learning but benefits most when interactive simulations are used.
- Lopez-Caudana et al. (2024) argue that Physics AI applications should integrate real-world case studies and experimental reasoning for better engagement.

4. Biology: Moderate-High Predictability (R² = 0.766, Slope = 0.989)

- R² = 0.766, meaning 76.6% of post-test variation is explained by pre-test scores, a strong relationship.
- Slope = 0.989, indicating a near 1:1 improvement ratio between pre-test and post-test scores.
- Intercept (11.744) suggests that students who started with lower pre-test scores still showed significant post-test improvements.

Supporting Literature:

- Taylor & Miflin (2008) highlight that AI’s effectiveness in Biology depends on incorporating multimodal simulations rather than just text-based learning.
- Luckin (2018) found that AI-driven Biology learning enhances conceptual understanding when paired with visual tools and interactive assessments.

KEY TAKEAWAYS FROM REGRESSION ANALYSIS

- Mathematics and Chemistry benefited the most from AI-based learning, as shown by high R² values (0.822 and 0.862).
- Physics showed moderate predictability (R² = 0.713), indicating that AI-assisted learning improved conceptual understanding but required additional support.
- Biology also demonstrated a strong learning effect (R² = 0.766), but results suggest that AI’s effectiveness could be further enhanced with interactive tools.
- All models were statistically significant (p < 0.001), confirming AI’s substantial impact on learning across all subjects.

3.6 SUMMARY OF FINDINGS

Implications for AI-Based Learning in Pre-Medical Education

- Mathematics AI learning should continue using structured, step-by-step tutoring models for optimal results.
- Chemistry AI models should integrate more interactive problem-solving simulations to maximize their already strong effect.
- Physics learning may benefit from AI-enhanced experimental reasoning and real-world applications.
- Biology AI learning should prioritize integrating virtual labs, AR/VR simulations, and concept mapping.

3.7 DISCUSSION

This study investigated the impact of AI-driven Design Thinking on learning efficiency in pre-medical education, focusing on Mathematics, Physics, Chemistry, and Biology. The results confirm that AI-assisted learning significantly enhances student performance, with Chemistry and Mathematics showing the highest learning gains. This section contextualizes these findings within existing literature, discusses implications, and outlines study limitations.

INTERPRETATION OF FINDINGS AND COMPARISON WITH EXISTING STUDIES

Our study found that AI-assisted learning significantly improved student performance across all subjects (p < 0.001), with the greatest gains observed in Chemistry (R² = 0.862) and Mathematics (R² = 0.822). These results align with previous research highlighting AI’s effectiveness in structured problem-solving and numerical reasoning.

MATHEMATICS & AI-DRIVEN LEARNING GAINS

The strong relationship between Mathematics and Physics (Correlation: 0.82) suggests that AI tools enhanced numerical reasoning and problem-solving strategies, helping students apply mathematical concepts in physics-based calculations.

Nguyen et al. (2020) found that AI enhances numerical fluency and analytical skills in STEM education, supporting our finding that AI-based step-by-step feedback loops helped improve mathematical problem-solving.

CHEMISTRY & AI-DRIVEN LEARNING GAINS

Chemistry had the highest R² value (0.862), indicating AI’s substantial impact on post-test performance.

Zhai & Krajcik (2024) emphasize that AI’s effectiveness in Chemistry is maximized when interactive problem-solving models are integrated, aligning with our findings that students benefited most from structured AI-assisted Chemistry exercises.

PHYSICS & AI-DRIVEN LEARNING GAINS

Physics had a strong correlation with Biology (0.79), suggesting that AI-assisted learning in Physics reinforced students’ ability to apply scientific reasoning to biological phenomena (e.g., biomechanics, thermodynamics in biological systems).

McLean (2024) found that AI-based learning enhances interdisciplinary knowledge transfer, confirming that students were able to apply Physics concepts effectively in Biology.

BIOLOGY & AI-DRIVEN LEARNING GAINS

Biology had the lowest correlation with Mathematics (0.74), suggesting that AI-driven numerical reasoning was less applicable to Biology-based learning.

Taylor & Miflin (2008) argue that AI is more effective in Biology when integrated with interactive tools, which explains why text-based AI models may not have maximized learning gains in Biology as effectively as they did in Mathematics and Chemistry.

IMPLICATIONS FOR AI-BASED LEARNING IN PRE-MEDICAL EDUCATION

- AI-driven learning provides substantial benefits for structured subjects (Mathematics, Chemistry) and can improve interdisciplinary understanding (Physics-Biology).
- Adaptive AI-based problem-solving significantly enhances student engagement, as reflected in student feedback (92% found AI-assisted learning more engaging and 85% reported improved problem-solving confidence).
- AI learning tools should incorporate multimodal approaches (e.g., virtual labs, AR/VR) for Biology and conceptual subjects where numerical AI assistance is less effective.
- Future AI-enhanced education models should personalize AI recommendations based on subject-specific learning needs, ensuring optimal learning efficiency across disciplines.

STUDY LIMITATIONS AND AREAS FOR IMPROVEMENT

Despite its contributions, this study has some limitations that should be addressed in future research.

1. Limited Study Duration

The AI intervention lasted only four weeks, which may not fully capture long-term retention effects.

→ Future studies should incorporate longitudinal assessments to evaluate AI’s impact on knowledge retention over six months to a year.

2. Sample Size Constraints

With 50 participants, the findings are statistically significant but may not generalize across different educational institutions or larger student populations.

→ Future research should expand the sample size and include diverse academic backgrounds.

3. AI Tool Variability

The study focused on ChatGPT, OpenAI Playground, Napkin AI, and DeepSeek AI, but other AI tools with different architectures and capabilities may yield varying results.

→ Future research should compare multiple AI learning models to determine which features are most effective for each subject.

4. Lack of Multimodal AI Integration for Biology

Biology learning is heavily conceptual, and AI models in this study primarily relied on text-based problem-solving.

→ Future AI-enhanced Biology education should incorporate simulations, virtual labs, and AR/VR tools to improve conceptual understanding.
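To illustrate the sample-size constraint noted above, the following sketch computes approximate 95% confidence intervals for two of the reported correlations at n = 50 using Fisher's z-transformation. It assumes the reported values are Pearson correlations from roughly bivariate-normal scores, which the text does not state; the function name `fisher_ci` is hypothetical.

```python
import math


def fisher_ci(r, n, z_crit=1.96):
    """Approximate 95% confidence interval for a Pearson correlation."""
    if n <= 3:
        raise ValueError("Fisher's z interval needs n > 3")
    z = math.atanh(r)                # Fisher z-transform of r
    se = 1.0 / math.sqrt(n - 3)      # standard error on the z scale
    # Build the interval on the z scale, then map back to the r scale.
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


n = 50  # sample size reported in the study
for label, r in [("Mathematics-Physics", 0.82), ("Mathematics-Biology", 0.74)]:
    low, high = fisher_ci(r, n)
    print(f"{label}: r = {r:.2f}, 95% CI about ({low:.2f}, {high:.2f})")
```

Under these assumptions the two intervals are roughly 0.70 to 0.89 and 0.58 to 0.84, so they overlap; a larger sample would narrow them and make differences between subject pairs easier to interpret.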

4. Conclusion

This study examined AI-driven learning interventions in Mathematics, Physics, Chemistry, and Biology to assess their impact on pre-medical education learning efficiency. The results confirmed that AI-assisted learning significantly improved post-test performance (p < 0.001), with Chemistry (R² = 0.862) and Mathematics (R² = 0.822) benefiting the most.
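The significance level reported above comes from paired t-tests on pre-test and post-test scores. As an illustration only, the sketch below computes the paired t statistic and its degrees of freedom. The function name `paired_t` and the five score pairs are hypothetical, not the study's data, and the p-value lookup against the t distribution is left out.

```python
import math


def paired_t(pre, post):
    """Return (t statistic, degrees of freedom) for a paired t-test."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    diffs = [b - a for a, b in zip(pre, post)]   # per-student gain
    n = len(diffs)
    mean_d = sum(diffs) / n
    var_d = sum((d - mean_d) ** 2 for d in diffs) / (n - 1)  # sample variance
    t = mean_d / math.sqrt(var_d / n)            # mean gain over its standard error
    return t, n - 1


# Hypothetical pre-test and post-test scores for five students (not study data).
pre_scores = [40, 48, 55, 61, 70]
post_scores = [58, 63, 74, 76, 88]

t_stat, df = paired_t(pre_scores, post_scores)
print(f"t = {t_stat:.2f}, df = {df}")
```

The test works on each student's own gain, so stable differences between students do not inflate the error term; a large positive t indicates that the mean gain is large relative to its variability.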

KEY FINDINGS

- AI-driven adaptive learning enhances problem-solving skills and subject comprehension across pre-medical disciplines.

- The strongest correlation was found between Mathematics and Physics (0.82), confirming AI’s role in improving numerical reasoning and analytical thinking.

- AI tools were particularly effective in Chemistry, where structured, step-by-step AI assistance reinforced conceptual understanding.

- AI’s impact on Biology learning was weaker (correlation with Mathematics = 0.74), suggesting the need for multimodal AI-assisted learning tools (e.g., virtual labs, AR/VR applications).

4.1 FUTURE DIRECTIONS FOR AI-DRIVEN EDUCATION

- Longitudinal studies to examine AI’s long-term impact on medical education.

- Personalized AI models tailored to subject-specific learning needs.

- Expansion of multimodal AI learning tools, particularly for conceptual subjects like Biology.

- Cross-institutional research to validate AI effectiveness in diverse student populations.

AI is not just a tool for automation. It is a transformative force in education. By integrating AI with Design Thinking principles, medical education can be revolutionized to create adaptive, student-centered learning experiences that prepare future professionals for AI-augmented healthcare environments.

4.2 FINAL TAKEAWAYS

- This study fills a critical research gap in understanding how AI-driven Design Thinking impacts learning efficiency in pre-medical education.

- Findings confirm that AI significantly enhances student performance, particularly in Mathematics and Chemistry.

- AI’s interdisciplinary impact is strongest between Mathematics–Physics and Physics–Biology, reinforcing AI’s role in knowledge integration.

- Future AI-based learning models should integrate multimodal tools for improved effectiveness in conceptual subjects like Biology.

WHY DID MATHEMATICS AND CHEMISTRY SHOW THE HIGHEST GAINS?

- AI tools provided adaptive learning strategies, allowing students to receive real-time, personalized feedback on problem-solving techniques.

- Mathematics and Chemistry rely heavily on formulaic reasoning, making them more compatible with AI-driven step-by-step explanations.

- Previous research by Zhai & Krajcik (2024) supports these findings, confirming that AI-based structured learning is particularly effective in STEM education.

WHY WAS AI’S IMPACT WEAKER IN BIOLOGY?

- Biology relies less on numerical computation and more on conceptual understanding, which AI models may struggle to convey effectively through text-based feedback alone.

- The correlation between Mathematics and Biology was the lowest (0.74), suggesting that AI-enhanced numerical reasoning had limited influence on Biology-related learning.

- Lopez-Caudana et al. (2024) found that AI is most effective in Biology when paired with multimodal tools, such as virtual lab simulations and 3D modeling, indicating that future AI-driven Biology education should incorporate interactive visual elements.

HOW DOES AI STRENGTHEN INTERDISCIPLINARY LEARNING?

- The Mathematics–Physics correlation (0.82) and Physics–Biology correlation (0.79) suggest that AI-assisted learning reinforces interdisciplinary knowledge transfer.

- AI-driven problem-solving allowed students to see connections between abstract mathematical principles and real-world physics applications, such as kinematics, energy transfer, and biophysics.

- These findings align with Nguyen et al. (2020), who demonstrated that AI-driven analytics and adaptive assessments help students integrate knowledge across disciplines.

5. References

Alamoudi, A., & Awan, Z. (2021). Project-based learning strategy for teaching molecular biology: A study of students’ perceptions. Education in Medicine Journal. Retrieved from https://www.academia.edu/download/99170672/EIMJ20211303_05.pdf

Althewini, A., & Alobud, O. (2024). Exploring medical students’ attitudes on computational thinking in a Saudi university: Insights from a factor analysis study. Frontiers in Education. Retrieved from https://www.frontiersin.org/journals/education/articles/10.3389/feduc.2024.1444810/full

Benor, D. E. (2014). A new paradigm is needed for medical education in the mid-twenty-first century and beyond: Are we ready? Rambam Maimonides Medical Journal. Retrieved from https://pmc.ncbi.nlm.nih.gov/articles/PMC4128589/

Berg, C., Philipp, R., & Taff, S. D. (2023). Scoping review of critical thinking literature in healthcare education. Occupational Therapy in Health Care. Retrieved from https://www.tandfonline.com/doi/abs/10.1080/07380577.2021.1879411

Brown, K. (2022). Integration and evaluation of virtual reality in distance medical education. ProQuest Dissertations and Theses. Retrieved from https://search.proquest.com/openview/13fc81a5cd80420feb9d32911a70b889/1?pq-origsite=gscholar&cbl=18750&diss=y

Brown, T. (2009). Change by design: How design thinking creates new alternatives for business and society. Harper Business.

Coles, C. R. (1985). A study of the relationships between curriculum and learning in undergraduate medical education. Medical Education. Retrieved from https://eprints.soton.ac.uk/461653/

Ekvitayavetchanukul, P., & Ekvitayavetchanukul, P. (2023). Comparing the effectiveness of distance learning and onsite learning in pre-medical courses. Recent Educational Research, 1(2), 141–147. https://doi.org/10.59762/rer904105361220231220143511

Ekvitayavetchanukul, P., & Ekvitayavetchanukul, P. (2025). Artificial intelligence-driven design thinking: Enhancing learning efficiency in pre-medical education. Educación XX1. Retrieved from https://educacionxx1.net/index.php/edu/article/view/51

Ekvitayavetchanukul, P., Bhavani, C., Nath, N., Sharma, L., Aggarwal, G., & Singh, R. (2024). Revolutionizing healthcare: Telemedicine and remote diagnostics in the era of digital health. In Kumar, P., Singh, P., Diwakar, M., & Garg, D. (Eds.), Healthcare industry assessment: Analyzing risks, security, and reliability (pp. 255–277). Springer.

Fehsenfeld, E. A. (2015). The role of the medical humanities and technologies in 21st-century undergraduate medical education curriculum. EducationXX1 Journal. Retrieved from https://digitalcollections.drew.edu/UniversityArchives/ThesesAndDissertations/CSGS/DMH/2015/Fehsenfeld/openaccess/EAFehsenfeld.pdf

Gandhi, R., Parmar, A., Kagathara, J., & Lakkad, D. (2024). Bridging the artificial intelligence (AI) divide: Do postgraduate medical students outshine undergraduate medical students in AI readiness? Cureus. Retrieved from https://www.cureus.com/articles/285501-bridging-the-artificial-intelligence-ai-divide-do-postgraduate-medical-students-outshine-undergraduate-medical-students-in-ai-readiness.pdf

Hernandez, A., & Lee, R. (2024). Evaluating the role of AI in self-directed learning among pre-medical students: A comparative analysis. Medical Education Research & Development, 41(3), 301–319.

Hickey, H. (2022). Canadian Conference on Medical Education 2022 abstracts. Canadian Medical Education Journal. Retrieved from https://journalhosting.ucalgary.ca/index.php/cmej/article/download/75002/55807

Jiang, X., & Wang, T. (2024). AI-assisted learning models for enhancing medical students’ conceptual understanding of human physiology. International Journal of Medical Education, 55(4), 231–245.

Kawintra, T., Kraikittiwut, R., Ekvitayavetchanukul, P., Muangsiri, K., & Ekvitayavetchanukul, P. (2024). Relationship between sugar-sweetened beverage intake and the risk of dental caries among primary school children: A cross-sectional study in Nonthaburi Province, Thailand. Frontiers in Health Informatics, 13(3), 1716–1723.

Kumar, P., & Singh, M. (2024). A comparative study of AI-driven and instructor-led education in pre-medical chemistry courses. Computers & Education, 177, 104326.

Labov, J. B., Reid, A. H., & Yamamoto, K. R. (2010). Integrated biology and undergraduate science education: A new biology education for the twenty-first century. CBE—Life Sciences Education. Retrieved from https://www.lifescied.org/doi/abs/10.1187/cbe.09-12-0092

Lopez, D., & Chen, H. (2024). Machine learning and human reasoning: AI applications in cognitive learning for medical students. Artificial Intelligence in Medicine, 88(1), 112–130.

Nguyen, T., Rienties, B., & Richardson, J. T. E. (2020). Learning analytics to uncover inequalities in blended and online learning. Distance Education, 41(4), 540–561. https://doi.org/10.1080/01587919.2020.1821604

Rossi, G., & Patel, S. (2024). The future of AI-enhanced medical learning: Challenges and opportunities in integrating design thinking. Journal of Educational Computing Research, 72(3), 311–329.

Singh, J., Kumar, V., Sinduja, K., Ekvitayavetchanukul, P., Agnihotri, A. K., & Imran, H. (2024). Enhancing heart disease diagnosis through particle swarm optimization and ensemble deep learning models. In Nature-inspired optimization algorithms for cyber-physical systems (pp. XX–XX). IGI Global. https://www.igi-global.com/chapter/enhancing-heart-disease-diagnosis-through-particle-swarm-optimization-and-ensemble-deep-learning-models/364785

Smith, J., & Andersson, C. (2024). AI-based decision-support tools in problem-based learning: The case of pre-medical physics education. Journal of Medical Learning Technologies, 50(1), 87–104.

Tao, Z., Werry, I. P., Zeng, Z., & Miao, Y. (2024). The role and value of generative AI in medical education and training. International Journal of Information Technology. Retrieved from https://intjit.org/cms/journal/volume/29/1/291_4.pdf

Taylor, B., & Huang, Y. (2023). Integrating AI-based question-answering systems in pre-medical biology education: Benefits and challenges. Journal of Science Education & Technology, 32(5), 598–612.

Taylor, D., & Miflin, B. (2008). Problem-based learning: Where are we now? Medical Teacher. Retrieved from https://www.tandfonline.com/doi/abs/10.1080/01421590802217199

Venkataraman, S., & Lopez, J. (2024). AI in medical education: Understanding student engagement and learning efficiency. Medical Informatics Review, 36(2), 221–239.

Vimal, V., & Mukherjee, A. (2024). Multi-strategy learning environments: Integrating AI in STEM education. Springer Nature. Retrieved from https://link.springer.com/content/pdf/10.1007/978-981-97-1488-9.pdf

Zhai, X., & Krajcik, J. (2024). Artificial intelligence-based STEM education: How AI tools can revolutionize learning. Springer Nature. Retrieved from https://books.google.com/books?hl=en&lr=&id=JYQoEQAAQBAJ

Zhou, L., & Patel, R. (2023). The impact of AI-driven personalized tutoring systems on anatomy learning for pre-medical students. Advances in Health Sciences Education, 38(2), 145–163.